Linux Kernel Hugepage Allocation Flow Analysis

Overview

Your program creates file-backed hugepages (5 pages × 2MB = 10MB) using hugetlbfs. Here’s the detailed kernel flow:
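The program's source is not part of this analysis. For orientation, here is a minimal sketch of a program that would go through the same four phases. It assumes hugetlbfs is mounted at /mnt/huge with a 2MB page size and at least 5 free hugepages. hugetlbfs_demo() is a hypothetical name, and the MAP_SHARED with PROT_READ | PROT_WRITE combination is inferred from the vm_flags value in the mmap trace rather than known.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical reconstruction of the traced program (its source is not shown).
 * Assumes `path` is on a mounted hugetlbfs whose page size equals hpage_size
 * and that at least nr_pages free hugepages exist. Returns 0 or -1. */
int hugetlbfs_demo(const char *path, size_t nr_pages, size_t hpage_size)
{
    size_t len = nr_pages * hpage_size;   /* 5 x 2MB = 10MB in the trace */

    /* 1. Create the file: hugetlbfs_create() -> hugetlbfs_get_inode() */
    int fd = open(path, O_CREAT | O_RDWR, 0755);
    if (fd < 0)
        return -1;

    /* 2. Shared read/write mapping: hugetlbfs_file_mmap() reserves the pages */
    char *ptr = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (ptr == MAP_FAILED) {
        close(fd);
        unlink(path);
        return -1;
    }

    /* 3. First touch of each hugepage faults it in: hugetlb_fault() */
    memset(ptr, 'H', len);

    /* 4. Drop the mapping, the descriptor and (assumption) the file itself */
    munmap(ptr, len);
    close(fd);
    unlink(path);
    return 0;
}
```

Calling hugetlbfs_demo("/mnt/huge/test_file", 5, 2 * 1024 * 1024) walks through file creation, mapping, first-touch faults and cleanup. The final unlink() is an assumption, because the trace does not show whether the original program removes the file.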

1. File Creation Phase (open() syscall)

When your program calls open("/mnt/huge/test_file", O_CREAT | O_RDWR, 0755):

User Space: open() syscall

↓

Kernel VFS Layer: sys_open()

↓

HugetlbFS: hugetlbfs_create()

↓

HugetlbFS: hugetlbfs_get_inode()


Kernel Log Evidence:

[384.353754] HUGETLBFS_CREATE: Creating file 'test_file' in hugetlbfs

[384.353758] HUGETLBFS_INODE: Creating new inode, mode=0x81ed

[384.353760] HUGETLBFS_INODE: Successfully created inode 16079
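The mode=0x81ed value in the inode log line is the permission argument passed to open() combined with the regular-file type bit: S_IFREG (0100000 octal, 0x8000) | 0755 (0x1ed) = 0x81ed, with a typical umask of 022 leaving 0755 unchanged. A small helper (split_mode() is a hypothetical name) separates the two parts using the standard <sys/stat.h> masks:

```c
#define _XOPEN_SOURCE 700
#include <sys/stat.h>

/* Split a raw inode mode, such as the mode=0x81ed printed by the
 * HUGETLBFS_INODE trace line, into file-type bits and permission bits. */
void split_mode(unsigned int mode, unsigned int *type, unsigned int *perm)
{
    *type = mode & S_IFMT;   /* S_IFREG (0100000) marks a regular file */
    *perm = mode & 07777;    /* rwx bits plus setuid/setgid/sticky */
}
```

For 0x81ed this yields type S_IFREG and permissions 0755, matching the arguments of the open() call.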

2. Memory Mapping Phase (mmap() syscall)

When your program calls mmap():

User Space: mmap() syscall

↓

Kernel MM: sys_mmap()

↓

Kernel MM: do_mmap_pgoff()

↓

HugetlbFS: hugetlbfs_file_mmap()

↓

Hugepage Core: hugetlb_reserve_pages()

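What hugetlb_reserve_pages() does:

- Calculates the number of hugepages the mapping needs: to - from = 5 pages

- Checks that enough unreserved hugepages remain in the pool

- Reserves 5 pages from the global pool and records the range in the file's reservation map

- Updates the reservation counters (resv_huge_pages, via hugetlb_acct_memory())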
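The reservation arithmetic can be modeled in a few lines of user-space C. This is a simplified sketch, not kernel code: struct pool_model, mapping_range() and model_reserve() are hypothetical names. The check roughly mirrors the free/resv comparison the kernel makes before trying surplus pages; surplus/overcommit handling is left out, as is the file's reservation map (which, for a shared mapping, keeps an already-reserved range from being counted twice).

```c
#include <stdbool.h>

/* Hypothetical model of two hstate counters; NOT the kernel's struct. */
struct pool_model {
    unsigned long free_huge_pages;
    unsigned long resv_huge_pages;
};

/* Hugepage range of the file covered by a mapping:
 * from = offset / hugepage size, to = from + length / hugepage size. */
void mapping_range(unsigned long offset, unsigned long len,
                   unsigned int hpage_shift, long *from, long *to)
{
    *from = (long)(offset >> hpage_shift);
    *to = *from + (long)(len >> hpage_shift);
}

/* Simplified reservation: succeed only if pages not yet promised to anyone
 * (free - resv) cover the request. Surplus/overcommit is ignored. */
bool model_reserve(struct pool_model *p, long needed)
{
    if (needed <= 0)
        return true;
    if (p->resv_huge_pages + (unsigned long)needed > p->free_huge_pages)
        return false;
    p->resv_huge_pages += (unsigned long)needed;
    return true;
}
```

With the trace's values (offset 0, length 10485760, 2MB pages) the range is from=0, to=5, and reserving 5 pages from a pool with free=10, resv=0 succeeds and leaves resv=5; a further request for 6 pages would fail in this model.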

Kernel Log Evidence:

[384.353806] HUGETLB_TRACE: hugetlbfs_file_mmap() START - file=test_file, vma_size=10485760, vm_flags=0xfb

[384.353808] HUGETLB_TRACE: hugetlb_reserve_pages() from=0, to=5, needed=5

[384.353809] HUGETLB_TRACE: hstate info - hugepage_size=2097152, free_hugepages=10

[384.353810] HUGETLB_TRACE: hugetlb_acct_memory() - delta=5

[384.353813] HUGETLB_TRACE: hugetlbfs_file_mmap() SUCCESS - vma=[0xb6a00000-0xb7400000]
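The vm_flags values in these traces can be decoded bit by bit. The table in this sketch copies flag values from include/linux/mm.h as they stood in kernels of this era; that is an assumption to check against the tree you traced, since high bits may have been reassigned over time. decode_vm_flags() is a hypothetical helper. Decoding 0xfb gives VM_READ|VM_WRITE|VM_SHARED plus the four VM_MAY* bits, i.e. a shared, writable, non-executable mapping. The 0x44007b logged at close time additionally contains VM_HUGETLB and VM_DONTEXPAND, which hugetlbfs_file_mmap() sets on the VMA.

```c
#include <stddef.h>
#include <string.h>

/* Flag values as in include/linux/mm.h of kernels from this period
 * (assumption: verify against the kernel you traced). */
static const struct { unsigned long bit; const char *name; } vm_flag_names[] = {
    { 0x00000001UL, "VM_READ" },
    { 0x00000002UL, "VM_WRITE" },
    { 0x00000004UL, "VM_EXEC" },
    { 0x00000008UL, "VM_SHARED" },
    { 0x00000010UL, "VM_MAYREAD" },
    { 0x00000020UL, "VM_MAYWRITE" },
    { 0x00000040UL, "VM_MAYEXEC" },
    { 0x00000080UL, "VM_MAYSHARE" },
    { 0x00040000UL, "VM_DONTEXPAND" },
    { 0x00400000UL, "VM_HUGETLB" },
};

/* Write the names of the known bits set in `flags` into `buf`, separated
 * by '|'. Bits not in the table are ignored; output is truncated safely. */
void decode_vm_flags(unsigned long flags, char *buf, size_t buflen)
{
    size_t n = sizeof vm_flag_names / sizeof vm_flag_names[0];

    if (buflen == 0)
        return;
    buf[0] = '\0';
    for (size_t i = 0; i < n; i++) {
        if (!(flags & vm_flag_names[i].bit))
            continue;
        if (buf[0] != '\0')
            strncat(buf, "|", buflen - strlen(buf) - 1);
        strncat(buf, vm_flag_names[i].name, buflen - strlen(buf) - 1);
    }
}
```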

Key Points:

- VMA (Virtual Memory Area) created: 0xb6a00000-0xb7400000 (10MB range)

- 5 hugepages reserved from a pool of 10 available

- No physical pages allocated yet (lazy allocation)

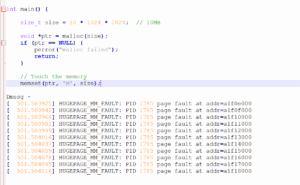

3. Memory Access Phase (memset() call)

When your program calls memset(ptr, 'H', size):

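The first access to each 2MB hugepage triggers a page fault; later accesses within the same hugepage use the mapping that fault installed: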

User Access: memset() touches first 2MB

↓

CPU: Page Fault Exception

↓

Kernel MM: do_page_fault()

↓

Hugepage Core: hugetlb_fault()

↓

Hugepage Core: alloc_huge_page()

↓

Hugepage Core: dequeue_huge_page_vma()
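The address arithmetic behind "one fault per hugepage" is short. The sketch below (hypothetical helper names, 2MB pages assumed) aligns an address down to its hugepage, as the kernel does with huge_page_mask(), finds the page's index inside the VMA, and counts how many first-touch faults one linear memset() over the mapping takes for a given page size.

```c
#define HUGEPAGE_SHIFT 21                       /* 2MB hugepages, as in the trace */
#define HUGEPAGE_SIZE  (1UL << HUGEPAGE_SHIFT)
#define HUGEPAGE_MASK  (~(HUGEPAGE_SIZE - 1))

/* Start address of the hugepage containing addr. */
unsigned long hugepage_base(unsigned long addr)
{
    return addr & HUGEPAGE_MASK;
}

/* Zero-based index of the hugepage containing addr within a VMA. */
unsigned long hugepage_index(unsigned long vm_start, unsigned long addr)
{
    return (addr - vm_start) >> HUGEPAGE_SHIFT;
}

/* Faults taken by one linear pass over [start, start + len) when each page
 * of size (1 << shift) faults exactly once, on its first touch. */
unsigned long first_touch_faults(unsigned long start, unsigned long len,
                                 unsigned int shift)
{
    if (len == 0)
        return 0;
    return ((start + len - 1) >> shift) - (start >> shift) + 1;
}
```

For the traced VMA starting at 0xb6a00000, a 10MB pass costs 5 faults with 2MB pages (shift 21) and 2560 with 4KB pages (shift 12), and 0xb7200000 is hugepage index 4, the fifth page.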

First Page Fault (Address 0xb6a00000):

Kernel Log Evidence:

[384.353967] HUGETLB_TRACE: hugetlb_fault() START - addr=0xb6a00000, flags=0x29

[384.353969] HUGETLB_TRACE: alloc_huge_page() START - addr=0xb6a00000, avoid_reserve=0

[384.353970] HUGETLB_TRACE: hstate - free_pages=10, resv_pages=5

[384.353975] HUGEPAGE_DEQUEUE: PID 2385 got page f7b7d000 from node 0 pool

[384.353979] HUGETLB_TRACE: alloc_huge_page() SUCCESS - page=f7b7d000, pfn=507904

[384.353981] HUGETLB_TRACE: vma_commit_reservation() - addr=0xb6a00000

What happens:

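- Fault Detection: No page-table entry exists yet for virtual address 0xb6a00000

- Page Allocation: Dequeues a free hugepage from the node 0 pool. f7b7d000 is the kernel address of its struct page descriptor, not a physical address; the page starts at PFN 507904, i.e. physical address 0x7c000000 (507904 × 4KB)

- Pool Updates: free_pages: 10→9, resv_pages: 5→4

- Reservation Commit: Converts the reservation into an actual allocation

- Page-Table Entry Creation: Maps virtual 0xb6a00000 → physical 0x7c000000 with a single 2MB entry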
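The pool updates can be replayed with a small model. This is a hypothetical user-space sketch (struct pool_model and model_fault_alloc() are illustrative names) of the rule dequeue_huge_page_vma() applies: a fault covered by a reservation consumes it, while an unreserved fault may only take a page that is not already promised to another mapping. Every successful dequeue lowers free_huge_pages.

```c
#include <stdbool.h>

/* Hypothetical model of two hstate counters; NOT the kernel's struct. */
struct pool_model {
    unsigned long free_huge_pages;
    unsigned long resv_huge_pages;
};

/* Model of taking one hugepage at fault time. */
bool model_fault_alloc(struct pool_model *p, bool has_reservation)
{
    if (has_reservation) {
        /* A reserved fault should always find a page; refuse if the
         * model's counters have somehow run dry. */
        if (p->resv_huge_pages == 0 || p->free_huge_pages == 0)
            return false;
        p->resv_huge_pages--;          /* the reservation is consumed */
    } else if (p->free_huge_pages <= p->resv_huge_pages) {
        return false;                  /* every free page is promised elsewhere */
    }
    p->free_huge_pages--;              /* the page leaves the free list */
    return true;
}
```

Starting from free=10, resv=5 and applying five reserved faults steps the counters through 9/4, 8/3, 7/2, 6/1 and 5/0, the same sequence as the pool-state column of the allocation table.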

This pattern repeats for all 5 pages:

Complete Allocation Sequence:

| Page | Virtual Addr | struct page | PFN | Physical Addr | Pool State |
|---|---|---|---|---|---|
| 1 | 0xb6a00000 | f7b7d000 | 507904 | 0x7c000000 | free=9, resv=4 |
| 2 | 0xb6c00000 | f7b89000 | 509440 | 0x7c600000 | free=8, resv=3 |
| 3 | 0xb6e00000 | f7b85000 | 508928 | 0x7c400000 | free=7, resv=2 |
| 4 | 0xb7000000 | f7b91000 | 510464 | 0x7ca00000 | free=6, resv=1 |
| 5 | 0xb7200000 | f7b8d000 | 509952 | 0x7c800000 | free=5, resv=0 |
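A PFN converts to a physical address by shifting it by the base page size (4KB here, an assumption consistent with the 10MB/4KB figure used elsewhere in this analysis), and a 2MB hugepage must begin on a 512-frame boundary. A small check with hypothetical helper names:

```c
#include <stdbool.h>
#include <stdint.h>

#define BASE_PAGE_SHIFT 12   /* 4KB base pages (assumed for this system) */
#define HUGEPAGE_ORDER  9    /* 2MB / 4KB = 512 = 1 << 9 frames */

/* Physical address of the first byte of a page frame. The 64-bit result
 * keeps the shift from overflowing on 32-bit builds. */
uint64_t pfn_to_phys(unsigned long pfn)
{
    return (uint64_t)pfn << BASE_PAGE_SHIFT;
}

/* True if the frame could be the head of a 2MB hugepage. */
bool pfn_is_hugepage_aligned(unsigned long pfn)
{
    return (pfn & ((1UL << HUGEPAGE_ORDER) - 1)) == 0;
}
```

Every PFN in the table passes the alignment check; for example, PFN 507904 is 0x7c000 in hex, which is physical address 0x7c000000.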

4. Memory Cleanup Phase (munmap() syscall)

When your program calls munmap():

User Space: munmap() syscall

↓

Kernel MM: sys_munmap()

↓

Hugepage Core: hugetlb_vm_op_close()

↓

Hugepage Core: free_huge_page() (for each page)

↓

Hugepage Core: hugetlb_unreserve_pages()

Kernel Log Evidence:

[386.287718] HUGEPAGE_VMA_CLOSE: PID 2385 closing hugepage VMA

[386.287722] HUGETLB_TRACE: vma_close() - vma=[0xb6a00000-0xb7400000], flags=0x44007b

[386.298546] HUGETLB_TRACE: free_huge_page() - page=f7b7d000, pfn=507904

[386.298553] HUGETLB_TRACE: after free - free_pages=6

Cleanup Process:

- VMA Destruction: Virtual memory area unmapped

- Physical Page Release: Each hugepage returned to free pool

- Pool Recovery: free_pages: 5→6→7→8→9→10 (back to original)

- Reservation Cleanup: All reservations released

Because these are pages of a hugetlbfs file, they also sit in that file's page cache. In mainline kernels, munmap() on its own only tears down the mapping; the pages go back to the free pool, and hugetlb_unreserve_pages() runs, when the file is truncated or its inode is evicted, for example once the file has been unlinked and its last open descriptor and mapping are gone.

Memory Layout During Execution

Virtual Memory Layout:

┌─────────────────┬─────────────────┬─────────────────┬─────────────────┬─────────────────┐

│ 0xb6a00000 │ 0xb6c00000 │ 0xb6e00000 │ 0xb7000000 │ 0xb7200000 │

│ 2MB │ 2MB │ 2MB │ 2MB │ 2MB │

│ (Page 1) │ (Page 2) │ (Page 3) │ (Page 4) │ (Page 5) │

└─────────────────┴─────────────────┴─────────────────┴─────────────────┴─────────────────┘

↓ ↓ ↓ ↓ ↓

Physical Memory:

┌─────────────────┬─────────────────┬─────────────────┬─────────────────┬─────────────────┐

│ 0x7c000000 │ 0x7c600000 │ 0x7c400000 │ 0x7ca00000 │ 0x7c800000 │

│ PFN 507904 │ PFN 509440 │ PFN 508928 │ PFN 510464 │ PFN 509952 │

└─────────────────┴─────────────────┴─────────────────┴─────────────────┴─────────────────┘

Key Kernel Data Structures

HugePage State (hstate):

struct hstate {

unsigned long nr_huge_pages; // Total hugepages in this pool (one hstate per page size)

unsigned long free_huge_pages; // Not in use; includes reserved pages

unsigned long resv_huge_pages; // Reserved by mappings but not yet faulted in

unsigned long surplus_huge_pages; // Allocated beyond nr_hugepages (overcommit)

// ... more fields

};
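The relationship between these counters is easy to misread: free_huge_pages still counts pages that are reserved, so the number a new mapping can actually reserve is free minus resv. The hypothetical helpers below (not kernel code) make that explicit. Applied to the trace, and assuming a total pool of 10 pages, both the state right after mmap() (free=10, resv=5) and the state after all faults (free=5, resv=0) leave exactly 5 pages for anyone else.

```c
#include <stdbool.h>

/* Hypothetical mirror of the four hstate counters; not the kernel's struct. */
struct hstate_counters {
    unsigned long nr_huge_pages;
    unsigned long free_huge_pages;
    unsigned long resv_huge_pages;
    unsigned long surplus_huge_pages;
};

/* Pages a new mapping could still reserve: free pages not already promised. */
unsigned long unreserved_free(const struct hstate_counters *h)
{
    return h->free_huge_pages - h->resv_huge_pages;
}

/* Pages currently backing some mapping or file. */
unsigned long in_use(const struct hstate_counters *h)
{
    return h->nr_huge_pages - h->free_huge_pages;
}

/* Sanity conditions assumed by this model: reservations never exceed
 * free pages, and free pages never exceed the pool size. */
bool counters_consistent(const struct hstate_counters *h)
{
    return h->resv_huge_pages <= h->free_huge_pages &&
           h->free_huge_pages <= h->nr_huge_pages;
}
```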

Virtual Memory Area (VMA):

struct vm_area_struct {

unsigned long vm_start; // 0xb6a00000

unsigned long vm_end; // 0xb7400000

unsigned long vm_flags; // VM_HUGETLB | VM_SHARED | ...

struct file *vm_file; // Points to hugetlbfs file

// ... more fields

};

Performance Implications

Benefits of Hugepages:

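- Reduced TLB Pressure: 5 TLB entries cover the full 10MB instead of 2560 (10MB / 4KB)

- Fewer Page Faults: 5 first-touch faults instead of 2560

- Cheaper Page-Table Walks: A TLB miss on a 2MB mapping ends one level earlier in the page-table walk, and the much smaller page tables occupy less CPU cache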
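The entry counts above come from dividing the mapping size by the page size. This sketch also computes TLB reach (entries × page size) to show why the effect grows with the working set; the helper names are hypothetical, and the 64-entry TLB in the usage note is an illustrative size, not a property of the traced CPU.

```c
#include <stdint.h>

/* Leaf translations needed to map `len` bytes with pages of `page_size`
 * bytes, rounded up so a partial page still needs an entry. */
uint64_t entries_needed(uint64_t len, uint64_t page_size)
{
    return (len + page_size - 1) / page_size;
}

/* "TLB reach": bytes covered when every TLB entry maps a page of page_size. */
uint64_t tlb_reach(uint64_t tlb_entries, uint64_t page_size)
{
    return tlb_entries * page_size;
}
```

With a hypothetical 64-entry TLB, tlb_reach(64, 4096) is 256KB while tlb_reach(64, 2097152) is 128MB, so the whole 10MB buffer fits in such a TLB only when it is mapped with hugepages.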

Memory Usage:

- Before: free_hugepages=10 (20MB available)

- During: free_hugepages=5 (10MB used, 10MB available)

- After: free_hugepages=10 (fully recovered)
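These counters can also be observed from user space: for the default hugepage size, /proc/meminfo reports HugePages_Total, HugePages_Free and HugePages_Rsvd, which correspond to the nr, free and resv counts in the trace. A small parser that works on the file's text (meminfo_value() is a hypothetical helper):

```c
#include <stdio.h>
#include <string.h>

/* Return the numeric value of `key` (e.g. "HugePages_Free") from text in
 * /proc/meminfo format, or -1 if the key is absent or has no number.
 * Lines look like "HugePages_Free:       10". */
long meminfo_value(const char *text, const char *key)
{
    size_t klen = strlen(key);
    const char *line = text;

    while (line && *line) {
        /* Match the whole key, so "HugePages" does not match "HugePages_Free" */
        if (strncmp(line, key, klen) == 0 && line[klen] == ':') {
            long value;
            if (sscanf(line + klen + 1, "%ld", &value) == 1)
                return value;
            return -1;
        }
        line = strchr(line, '\n');
        if (line)
            line++;                  /* move to the start of the next line */
    }
    return -1;
}
```

If the system behaves as in the trace, snapshots taken before mmap(), after mmap(), after memset() and after cleanup should show HugePages_Free as 10, 10, 5, 10 and HugePages_Rsvd as 0, 5, 0, 0.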

Your program demonstrates the complete lifecycle of file-backed hugepage allocation:

- File Creation: Creates inode in hugetlbfs

- Memory Mapping: Reserves 5×2MB pages, creates VMA

- Lazy Allocation: Physical pages allocated on first access via page faults

- Memory Usage: All 10MB filled with ‘H’ characters

- Cleanup: Pages returned to kernel pool, reservations released

The kernel manages this through the hugetlb subsystem, which keeps a separate pool (one struct hstate) for each supported hugepage size and coordinates virtual memory management, filesystem operations, and physical memory allocation.