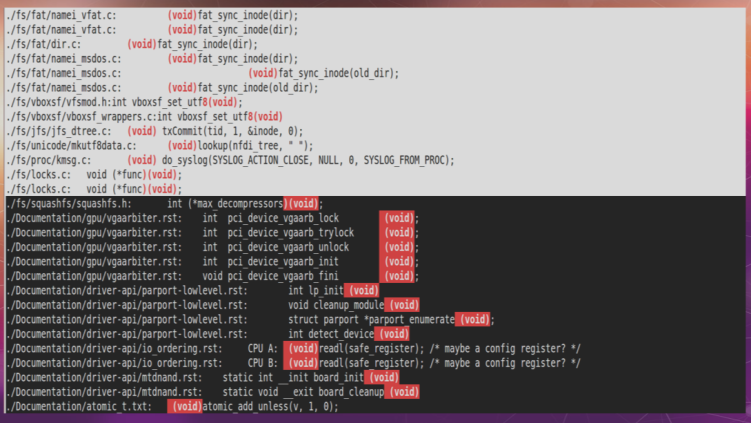

Mastering the use of `void` in C functions is crucial for embedded engineers at all levels. Here are key interview questions and what interviewers expect:

𝐈𝐧𝐭𝐞𝐫𝐯𝐢𝐞𝐰 𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧𝐬 & 𝐄𝐱𝐩𝐞𝐜𝐭𝐞𝐝 𝐊𝐧𝐨𝐰𝐥𝐞𝐝𝐠𝐞

1. 𝐂𝐨𝐝𝐞 𝐑𝐞𝐯𝐢𝐞𝐰 𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧:

void cleanup_system(void) {

close(fd);

pthread_mutex_unlock(&mutex);

free(ptr);

}

𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧: “What’s wrong with this cleanup function? How would you improve it with proper void usage?”

𝐈𝐧𝐭𝐞𝐫𝐯𝐢𝐞𝐰𝐞𝐫 𝐄𝐱𝐩𝐞𝐜𝐭𝐬:

– Recognition that close() and pthread_mutex_unlock() return values need handling

– Knowledge of (void) casting for intentionally ignored returns

– Understanding that free() doesn’t need casting as it returns void

– Proper error handling or explicit ignoring with (void)
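One way to address the review points above is sketched here. It assumes `fd`, `mutex`, and `ptr` are file-scope objects (the original snippet does not show their declarations) and that the caller holds `mutex` when cleanup runs. `last_unlock_error` is a hypothetical variable added only so the checked result can be observed.

```c
#include <pthread.h>
#include <stdlib.h>
#include <unistd.h>

/* Assumed file-scope state; the original snippet does not declare these. */
static int fd = -1;
static pthread_mutex_t mutex = PTHREAD_MUTEX_INITIALIZER;
static void *ptr = NULL;
static int last_unlock_error = 0;   /* hypothetical: records the unlock result */

void cleanup_system(void)
{
    if (fd >= 0) {
        /* Nothing useful can be done if close() fails during teardown,
         * so the result is discarded explicitly. */
        (void)close(fd);
        fd = -1;
    }

    /* pthread_mutex_unlock() returns an error number directly, not -1/errno. */
    last_unlock_error = pthread_mutex_unlock(&mutex);

    /* free() returns void, so no cast is needed; free(NULL) is a no-op. */
    free(ptr);
    ptr = NULL;
}
```

Resetting `fd` and `ptr` after release makes a second call harmless for those two resources; the mutex still has to be held by the caller each time.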

2. 𝐅𝐮𝐧𝐜𝐭𝐢𝐨𝐧 𝐈𝐦𝐩𝐥𝐞𝐦𝐞𝐧𝐭𝐚𝐭𝐢𝐨𝐧 𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧:

void register_callback(void (*handler)(void));

𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧: “Implement both the callback function and registration handler that would match this prototype.”

𝐈𝐧𝐭𝐞𝐫𝐯𝐢𝐞𝐰𝐞𝐫 𝐄𝐱𝐩𝐞𝐜𝐭𝐬:

– Proper function pointer syntax

– Function taking no parameters (void)

– NULL pointer checking in implementation

– Clear understanding of callback mechanisms
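A minimal sketch of an answer to the prototype above. `fire_event()`, `on_tick()`, and `tick_count` are hypothetical names introduced for illustration; only `register_callback` comes from the question.

```c
#include <stddef.h>

static void (*registered_handler)(void) = NULL;
static int tick_count = 0;

/* Stores the handler; a NULL argument is rejected and the old handler stays. */
void register_callback(void (*handler)(void))
{
    if (handler == NULL)
        return;
    registered_handler = handler;
}

/* Hypothetical dispatcher: invokes the registered handler, if there is one. */
void fire_event(void)
{
    if (registered_handler != NULL)
        registered_handler();
}

/* A callback whose type matches void (*)(void). */
void on_tick(void)
{
    tick_count++;
}
```

Calling `register_callback(on_tick)` followed by `fire_event()` increments `tick_count` once. Checking for NULL in both places means neither a bad registration nor an early event dereferences a null function pointer.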

3. 𝐄𝐫𝐫𝐨𝐫 𝐇𝐚𝐧𝐝𝐥𝐢𝐧𝐠 𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧:

int mutex_lock(void);

int mutex_unlock(void);

void critical_section(void) {

mutex_lock();

// … some operations

mutex_unlock();

}

𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧: “This code has two issues related to void usage. Identify and fix them.”

𝐈𝐧𝐭𝐞𝐫𝐯𝐢𝐞𝐰𝐞𝐫 𝐄𝐱𝐩𝐞𝐜𝐭𝐬:

– Recognition that the return value of mutex_lock() must be checked

– Understanding that the return value of mutex_unlock() can be discarded explicitly with a (void) cast

– Proper error handling implementation

– Knowledge of critical section safety
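The two fixes can be sketched as follows. The bodies of `mutex_lock()` and `mutex_unlock()` are assumptions (the question only gives their prototypes); here they wrap a pthread mutex, and `shared_counter` stands in for the elided operations.

```c
#include <pthread.h>

static pthread_mutex_t section_lock = PTHREAD_MUTEX_INITIALIZER;
static int shared_counter = 0;

/* Hypothetical implementations of the two prototypes from the question. */
int mutex_lock(void)   { return pthread_mutex_lock(&section_lock); }
int mutex_unlock(void) { return pthread_mutex_unlock(&section_lock); }

void critical_section(void)
{
    if (mutex_lock() != 0)
        return;             /* never touch shared state without the lock */

    shared_counter++;       /* stands in for "some operations" */

    /* No recovery is possible at this point, so the result is
     * discarded on purpose rather than silently. */
    (void)mutex_unlock();
}
```

The asymmetry is deliberate: a failed lock changes what the function may do next, while a failed unlock leaves nothing to retry.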

4. 𝐅𝐮𝐧𝐜𝐭𝐢𝐨𝐧 𝐃𝐞𝐜𝐥𝐚𝐫𝐚𝐭𝐢𝐨𝐧 𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧:

void process_data() { … }

void process_data(void) { … }

(void)process_data();

𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧: “Explain the differences between these three uses of void.”

𝐈𝐧𝐭𝐞𝐫𝐯𝐢𝐞𝐰𝐞𝐫 𝐄𝐱𝐩𝐞𝐜𝐭𝐬:

– Understanding of K&R-style empty parentheses (parameters unspecified before C23) versus the explicit (void) prototype

– Knowledge of parameter safety differences

– Recognition of return value casting purpose

– Clear explanation of when to use each

5. 𝐒𝐲𝐬𝐭𝐞𝐦 𝐃𝐞𝐬𝐢𝐠𝐧 𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧:

𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧: “Implement a thread-safe cleanup function that:

– Takes no parameters

– Ignores cleanup operation return values safely

– Follows modern C coding standards”

𝐈𝐧𝐭𝐞𝐫𝐯𝐢𝐞𝐰𝐞𝐫 𝐄𝐱𝐩𝐞𝐜𝐭𝐬:

– Proper (void) parameter usage

– Explicit return value handling

– Thread-safety implementation

– Modern C coding standards compliance

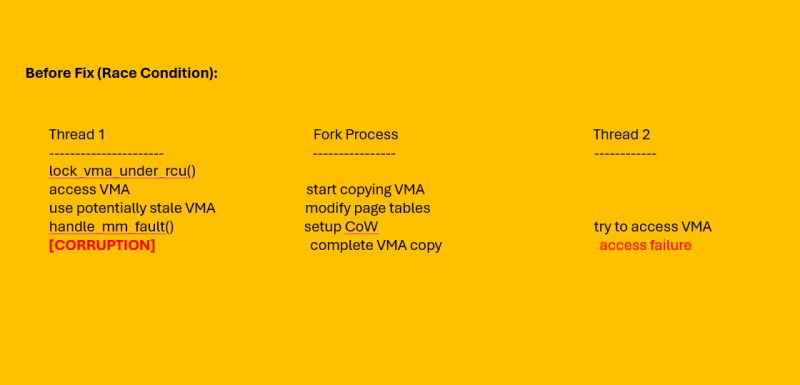

1. 𝐋𝐨𝐜𝐤𝐢𝐧𝐠 𝐚𝐧𝐝 𝐑𝐚𝐜𝐞 𝐂𝐨𝐧𝐝𝐢𝐭𝐢𝐨𝐧𝐬

𝐑𝐚𝐜𝐞 𝐂𝐨𝐧𝐝𝐢𝐭𝐢𝐨𝐧𝐬:

▪️ A race condition occurs when multiple threads/processes access shared resources simultaneously without proper synchronization.

▪️ Can lead to data corruption, inconsistent states, and unpredictable behavior.

Example of a Race Condition:

| Thread 1 | Thread 2 |
| --- | --- |
| read value X=5 | |
| | read value X=5 |
| increment X=6 | |
| | increment X=6 |
| write back X | |
| | write back X |

// X is incremented only once instead of twice!
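The lost update shown above disappears once the read, increment, and write-back happen under a lock. This is a user-space sketch with POSIX threads, not kernel code; `run_two_workers()`, `worker()`, and `ITERATIONS` are names chosen for illustration.

```c
#include <pthread.h>

#define ITERATIONS 100000

static long counter;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *worker(void *arg)
{
    (void)arg;  /* unused */
    for (int i = 0; i < ITERATIONS; i++) {
        if (pthread_mutex_lock(&counter_lock) != 0)
            return NULL;
        counter++;      /* read, increment, write back as one protected unit */
        (void)pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Runs two workers and returns the final counter, or -1 on thread errors. */
long run_two_workers(void)
{
    pthread_t t1, t2;

    counter = 0;
    if (pthread_create(&t1, NULL, worker, NULL) != 0)
        return -1;
    if (pthread_create(&t2, NULL, worker, NULL) != 0) {
        (void)pthread_join(t1, NULL);
        return -1;
    }
    (void)pthread_join(t1, NULL);
    (void)pthread_join(t2, NULL);
    return counter;
}
```

With the lock in place the result is always twice `ITERATIONS`; removing the lock/unlock pair reintroduces the interleaving from the table and the total can come up short.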

𝐓𝐲𝐩𝐞𝐬 𝐨𝐟 𝐋𝐨𝐜𝐤𝐬 𝐢𝐧 𝐋𝐢𝐧𝐮𝐱:

Mutex

Spinlock

RW Semaphore

RCU (Read-Copy-Update)

Sequence Lock

2. 𝐅𝐨𝐫𝐤 𝐎𝐩𝐞𝐫𝐚𝐭𝐢𝐨𝐧 𝐢𝐧 𝐋𝐢𝐧𝐮𝐱

𝐖𝐡𝐚𝐭 𝐢𝐬 𝐟𝐨𝐫𝐤()?

▪️ Creates a new process by duplicating the calling process

▪️ Child process gets a copy of parent’s memory space

▪️ Copy-on-Write (CoW) optimization used

𝐅𝐨𝐫𝐤 𝐒𝐭𝐞𝐩𝐬 :

Create new task structure

Copy process credentials

Create new memory descriptor

Copy page tables

Copy VMAs (Virtual Memory Areas)

Setup CoW mechanisms

Copy file descriptors

Copy other resources

𝐊𝐞𝐲 𝐂𝐨𝐝𝐞 𝐏𝐚𝐭𝐡:

sys_fork()

→ kernel_clone()

→ copy_mm()

→ dup_mm()

→ dup_mmap() // 𝐕𝐌𝐀 𝐜𝐨𝐩𝐲𝐢𝐧𝐠 𝐡𝐚𝐩𝐩𝐞𝐧𝐬
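From user space, the effect of the duplicated address space can be observed with a short experiment: a write made by the child is not visible in the parent. This is only a sketch of the visible semantics, not of the kernel path above; `fork_isolation_demo()` is a hypothetical helper name.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns the parent's copy of `value` after the child modified its own copy,
 * or -1 if fork/wait failed. */
int fork_isolation_demo(void)
{
    int value = 5;
    pid_t pid = fork();

    if (pid < 0)
        return -1;

    if (pid == 0) {
        /* Child: the first write triggers copy-on-write for this page. */
        value = 99;
        _exit(value == 99 ? 0 : 1);
    }

    int status = 0;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    if (!WIFEXITED(status) || WEXITSTATUS(status) != 0)
        return -1;

    return value;   /* the parent's page was never modified */
}
```

The child uses `_exit()` so that it does not run the parent's exit handlers or flush inherited stdio buffers a second time.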

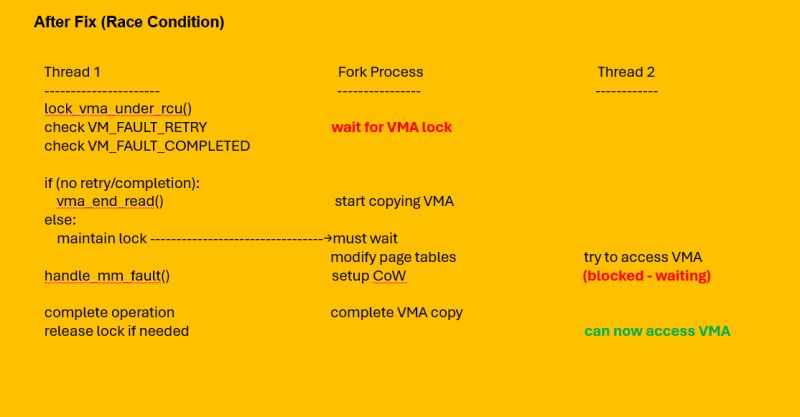

3. 𝐕𝐌𝐀 𝐑𝐚𝐜𝐞 𝐂𝐨𝐧𝐝𝐢𝐭𝐢𝐨𝐧 𝐚𝐧𝐝 𝐅𝐢𝐱

𝐕𝐢𝐫𝐭𝐮𝐚𝐥 𝐌𝐞𝐦𝐨𝐫𝐲 𝐀𝐫𝐞𝐚𝐬 (𝐕𝐌𝐀𝐬):

▪️ Represent contiguous virtual memory regions

▪️ Contain permissions, flags, and mapping information

▪️ Managed in mm_struct of each process

▪️ The Race Condition:

▪️ Problem scenario during fork:

𝐂𝐨𝐝𝐞 (𝐰𝐢𝐭𝐡 𝐫𝐚𝐜𝐞, 𝐢𝐧 𝐦𝐮𝐥𝐭𝐢𝐭𝐡𝐫𝐞𝐚𝐝 𝐬𝐜𝐞𝐧𝐚𝐫𝐢𝐨 ) –

vma = lock_vma_under_rcu(mm, address);

fault = handle_mm_fault(vma, address, flags);

vma_end_read(vma); // Release too early

if (!(fault & VM_FAULT_RETRY)) {

// Check conditions after release

}

𝐅𝐢𝐱𝐞𝐝 𝐜𝐨𝐝𝐞 –

vma = lock_vma_under_rcu(mm, address);

fault = handle_mm_fault(vma, address, flags);

if (!(fault & (VM_FAULT_RETRY | VM_FAULT_COMPLETED)))

vma_end_read(vma); // Release only if the fault handler has not already dropped the VMA lock

𝐑𝐞𝐩𝐫𝐨𝐝𝐮𝐜𝐞𝐫 𝐏𝐫𝐨𝐠𝐫𝐚𝐦:

// Example of problematic code pattern

for (i = 0; i != 2; i += 1)

clone(&thread, &stacks[i] + 1, CLONE_THREAD | CLONE_VM | CLONE_SIGHAND, NULL);

while (1) {

if (fork() == 0) _exit(0);

(void)wait(NULL);

}

Sharing insights from our latest technical session on Linux kernel device configuration! We explored the intricate world of hardware-software interaction in Linux systems.

🎯 𝐊𝐞𝐲 𝐓𝐞𝐜𝐡𝐧𝐢𝐜𝐚𝐥 𝐈𝐧𝐬𝐢𝐠𝐡𝐭𝐬:

Architecture-Specific Approaches:

x86 platforms use ACPI tables provided by the BIOS/UEFI firmware

ARM systems utilize Device Tree mechanism

Modern hardware can support both simultaneously.

𝐃𝐫𝐢𝐯𝐞𝐫 𝐈𝐧𝐭𝐞𝐥𝐥𝐢𝐠𝐞𝐧𝐜𝐞:

Dual match tables (of_match_table and acpi_match_table in struct device_driver)

Unique device fingerprinting through compatible fields

Cross-architecture support capabilities

𝐁𝐨𝐨𝐭𝐥𝐨𝐚𝐝𝐞𝐫 𝐌𝐚𝐠𝐢𝐜:

Dynamic hardware detection

Intelligent Device Tree blob selection

Register r2 carries the DTB address on 32-bit ARM (the same register that historically carried the ATAGS pointer)

💡 𝐌𝐨𝐬𝐭 𝐈𝐧𝐭𝐞𝐫𝐞𝐬𝐭𝐢𝐧𝐠 𝐃𝐢𝐬𝐜𝐨𝐯𝐞𝐫𝐲:

Some modern drivers can handle both ACPI and Device Tree configurations simultaneously, enabling seamless cross-architecture compatibility!

🔍 𝐓𝐞𝐜𝐡𝐧𝐢𝐜𝐚𝐥 𝐃𝐞𝐞𝐩-𝐃𝐢𝐯𝐞 𝐅𝐥𝐨𝐰:

𝐃𝐞𝐯𝐢𝐜𝐞 𝐓𝐫𝐞𝐞: DTS → DTC → DTB → Bootloader → Memory → Kernel → Device Instance.

𝐀𝐂𝐏𝐈: BIOS → ACPI Tables → Kernel → Device Configuration.

Want to dive deeper? Check out our webinar here:

https://lnkd.in/gQVvhbAw

𝐋𝐞𝐚𝐫𝐧𝐢𝐧𝐠 𝐎𝐩𝐩𝐨𝐫𝐭𝐮𝐧𝐢𝐭𝐢𝐞𝐬:

____________________________

𝐒𝐞𝐥𝐟-𝐏𝐚𝐜𝐞𝐝 𝐂𝐨𝐮𝐫𝐬𝐞:

Learn at your own pace

Structured kernel programming modules

Practical examples, bug study

Hands-on debugging experience

𝐂𝐥𝐚𝐬𝐬𝐫𝐨𝐨𝐦 𝐓𝐫𝐚𝐢𝐧𝐢𝐧𝐠 𝐟𝐨𝐫 𝐅𝐫𝐞𝐬𝐡𝐞𝐫𝐬:

6 Months intensive program

Placement support

Real-world bug analysis

Kernel development fundamentals

Live projects & case studies

𝐖𝐞𝐞𝐤𝐞𝐧𝐝 𝐎𝐧𝐥𝐢𝐧𝐞 𝐂𝐨𝐮𝐫𝐬𝐞 𝐟𝐨𝐫 𝐖𝐨𝐫𝐤𝐢𝐧𝐠 𝐏𝐫𝐨𝐟𝐞𝐬𝐬𝐢𝐨𝐧𝐚𝐥𝐬:

180+ Hours of training

8 Months comprehensive program

Flexible for working professionals

📽 𝐘𝐨𝐮𝐓𝐮𝐛𝐞 𝐜𝐡𝐚𝐧𝐧𝐞𝐥

https://lnkd.in/eYyNEqp

𝐌𝐨𝐝𝐮𝐥𝐞𝐬 𝐂𝐨𝐯𝐞𝐫𝐞𝐝 –

1) System Programming + Live project. (35Hrs.)

2) Linux kernel internals + Live project ( 35 Hrs.)

3) Linux device driver (35 Hrs.)

4) Linux socket programming (30 Hrs.)

5) Linux network device driver, PCI, USB driver code walk through, Linux crash analysis and Kdump (30 Hrs.)

7) JTag debugging ( 7 Hrs. )

– 𝐏𝐫𝐢𝐜𝐢𝐧𝐠 :

https://lnkd.in/ePEK2pJh

In cybersecurity, Return-Oriented Programming (ROP) and Jump-Oriented Programming (JOP) are techniques that allow attackers to hijack a program’s control flow. These attacks reuse existing code snippets (gadgets) instead of injecting new code, making them harder to detect and defend against.

𝐑𝐞𝐭𝐮𝐫𝐧-𝐎𝐫𝐢𝐞𝐧𝐭𝐞𝐝 𝐏𝐫𝐨𝐠𝐫𝐚𝐦𝐦𝐢𝐧𝐠 (𝐑𝐎𝐏):

ROP exploits buffer overflow vulnerabilities to overwrite a function’s return address, redirecting execution to gadgets in memory.

𝐉𝐮𝐦𝐩-𝐎𝐫𝐢𝐞𝐧𝐭𝐞𝐝 𝐏𝐫𝐨𝐠𝐫𝐚𝐦𝐦𝐢𝐧𝐠 (𝐉𝐎𝐏)

JOP is similar but targets indirect jumps instead of returns, making it more versatile than ROP.

These attacks defeat DEP (Data Execution Prevention) because no new code is injected, and they can defeat ASLR (Address Space Layout Randomization) when combined with an information leak, so more advanced protection techniques are required.

🔐 𝐏𝐫𝐨𝐭𝐞𝐜𝐭𝐢𝐧𝐠 𝐀𝐠𝐚𝐢𝐧𝐬𝐭 𝐑𝐎𝐏 𝐚𝐧𝐝 𝐉𝐎𝐏 🔐

1️⃣ Control-Flow Integrity (CFI):

CFI ensures that function calls and jumps in a program only occur at valid, predefined locations, preventing attacks like ROP and JOP.

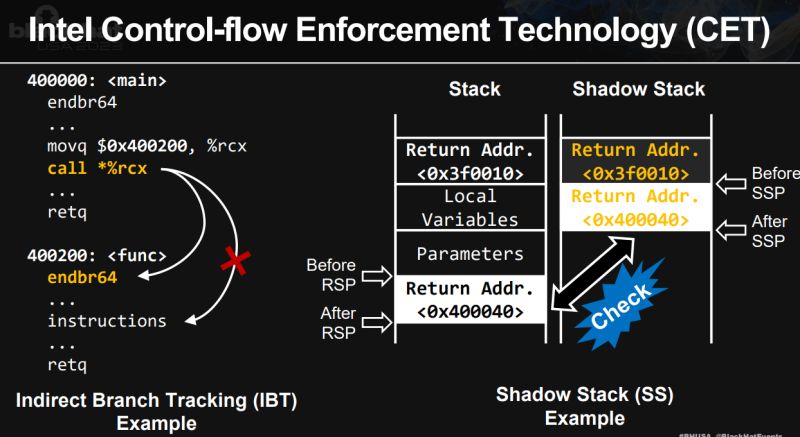

2️⃣ Intel Control-Flow Enforcement Technology (CET):

Intel’s CET is a hardware-based defense with two key components:

Indirect Branch Tracking (IBT): Ensures indirect branches target valid locations marked with ENDBR instructions.

𝐒𝐡𝐚𝐝𝐨𝐰 𝐒𝐭𝐚𝐜𝐤 (𝐒𝐒): Verifies return addresses match expected values.

CET offers strong protection with minimal performance overhead.

# Kernel configuration (x86)

CONFIG_X86_KERNEL_IBT=y # Indirect Branch Tracking for the kernel

CONFIG_X86_USER_SHADOW_STACK=y # CET shadow stack for user space

3️⃣ Clang/LLVM kCFI:

For environments lacking hardware support, Clang/LLVM kCFI provides software-based protection by adding control-flow checks at compile time.

CONFIG_CFI_CLANG=y # Enable Clang kCFI

# CONFIG_CFI_PERMISSIVE is not set (strict mode: a violation is fatal instead of only a warning)

CONFIG_SHADOW_CALL_STACK=y # Shadow call stack, a separate Clang feature (arm64, riscv)

⚠️ 𝐖𝐞𝐚𝐤𝐧𝐞𝐬𝐬𝐞𝐬 𝐢𝐧 𝐊𝐞𝐫𝐧𝐞𝐥 𝐂𝐅𝐈 𝐃𝐞𝐟𝐞𝐧𝐬𝐞𝐬 – 𝐏𝐎𝐏 ⚠️

While CFI, Intel CET, and Clang/LLVM kCFI offer strong protections, 𝐏𝐚𝐠𝐞-𝐎𝐫𝐢𝐞𝐧𝐭𝐞𝐝 𝐏𝐫𝐨𝐠𝐫𝐚𝐦𝐦𝐢𝐧𝐠 (𝐏𝐎𝐏) 𝐛𝐲𝐩𝐚𝐬𝐬𝐞𝐬 𝐭𝐡𝐞𝐬𝐞 𝐝𝐞𝐟𝐞𝐧𝐬𝐞𝐬. POP exploits writable page tables in kernel memory, allowing attackers to remap pages and create new control flows using legitimate code within the kernel. This makes it harder for current CFI mechanisms to detect or block the exploit.

For more info – https://shorturl.at/tNKml

𝐒𝐜𝐞𝐧𝐚𝐫𝐢𝐨 1: Memory Management – Process Memory Access 🔴

𝐃𝐞𝐬𝐢𝐠𝐧 𝐑𝐞𝐪𝐮𝐢𝐫𝐞𝐦𝐞𝐧𝐭: You’re implementing UserfaultFD functionality that needs to access another process’s memory mapping to zero-fill pages. The target process might exit while you’re accessing its memory descriptor.

𝐘𝐨𝐮𝐫 𝐭𝐚𝐬𝐤: Access the process’s mm_struct (memory descriptor) safely, perform memory operations like page allocation and mapping, then clean up properly.

𝐂𝐫𝐢𝐭𝐢𝐜𝐚𝐥 𝐜𝐨𝐧𝐬𝐭𝐫𝐚𝐢𝐧𝐭: The target process can exit at any moment, potentially freeing the mm_struct while you’re using it.

𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧: Which pattern would you choose to safely acquire a reference to the target process’s mm_struct?

•𝐀) 𝐓𝐞𝐬𝐭-𝐚𝐧𝐝-𝐈𝐧𝐜𝐫𝐞𝐦𝐞𝐧𝐭: Check if the mm_struct is still valid (mm_users > 0), then increment mm_users reference count if safe

•𝐁) 𝐈𝐧𝐜𝐫𝐞𝐦𝐞𝐧𝐭-𝐚𝐧𝐝-𝐓𝐞𝐬𝐭: Increment the mm_struct’s mm_users reference count first, then check if the mm_struct is still valid

++++++++++++++++++++++

Scenario 1 (Memory Management): Cross-process safety:

// 𝐖𝐑𝐎𝐍𝐆 𝐎𝐩𝐭𝐢𝐨𝐧 𝐀 – 𝐃𝐞𝐚𝐝𝐥𝐲 𝐫𝐚𝐜𝐞 𝐜𝐨𝐧𝐝𝐢𝐭𝐢𝐨𝐧

if (atomic_read(&mm->mm_users) > 0) { // ← Last user can drop its reference HERE

atomic_inc(&mm->mm_users); // ← Too late! mm_users may already be 0 and the address space torn down

// CRASH – using a dead address space

}

// 𝐂𝐎𝐑𝐑𝐄𝐂𝐓 𝐎𝐩𝐭𝐢𝐨𝐧 𝐁 – 𝐀𝐭𝐨𝐦𝐢𝐜 𝐫𝐞𝐟𝐞𝐫𝐞𝐧𝐜𝐞 𝐚𝐜𝐪𝐮𝐢𝐬𝐢𝐭𝐢𝐨𝐧

if (atomic_inc_not_zero(&mm->mm_users)) {

// Successfully got reference, mm_struct is safe to use

handle_userfault(mm); // ← Safe cross-process access

mmput(mm); // ← Release when done

} else {

return -ESRCH; // ← Process already exiting

}
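The property that makes `atomic_inc_not_zero()` safe is that the zero check and the increment are a single atomic step. A user-space sketch of the same idea with C11 atomics is shown below; `ref_get_unless_zero()` is a hypothetical name, and the kernel's own implementation differs in detail.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Takes a reference only if the count is currently non-zero.
 * Returns true when the reference was acquired. */
bool ref_get_unless_zero(atomic_int *count)
{
    int old = atomic_load(count);

    while (old != 0) {
        /* Succeeds only if *count still equals old; on failure the
         * current value is written back into old and the loop retries. */
        if (atomic_compare_exchange_weak(count, &old, old + 1))
            return true;
    }
    return false;   /* count was 0: the object is already being torn down */
}
```

A count of 1 becomes 2 and the call returns true; a count of 0 stays 0 and the call returns false, with no window in between for another thread to drop the last reference.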

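Cross-process safety comes from a counter-intuitive atomic primitive: atomic_inc_not_zero() performs the zero check and the increment as one atomic step, which closes the window that the separate check in Option A leaves open. In current kernels the helper for this is mmget_not_zero(mm), and the caller must already hold a reference that keeps the mm_struct memory itself alive (for example mm_count, taken with mmgrab()).

For more, refer to the attachment.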

𝐂𝐡𝐚𝐥𝐥𝐞𝐧𝐠𝐞 𝐲𝐨𝐮𝐫𝐬𝐞𝐥𝐟: Can you identify why Test-and-Increment fails in the other 6 scenarios? Download the complete challenge set to test your kernel concurrency knowledge!


Choosing the wrong atomic pattern turns “safe” code into production crashes.

𝐏𝐚𝐭𝐭𝐞𝐫𝐧 1: 𝐓𝐞𝐬𝐭-𝐚𝐧𝐝-𝐈𝐧𝐜𝐫𝐞𝐦𝐞𝐧𝐭

// Check condition first, then modify if safe

if (atomic_read(&counter) > 0) { // ← Test phase

atomic_inc(&counter); // ← Increment phase

// proceed with operation

}

𝐏𝐚𝐭𝐭𝐞𝐫𝐧 2: 𝐈𝐧𝐜𝐫𝐞𝐦𝐞𝐧𝐭-𝐚𝐧𝐝-𝐓𝐞𝐬𝐭

// Modify first, then check the result

int new_val = atomic_inc_return(&counter); // ← Increment phase

if (new_val == 1) { // ← Test phase

// handle state transition

}

𝐖𝐡𝐞𝐧 𝐭𝐨 𝐔𝐬𝐞 𝐄𝐚𝐜𝐡 𝐏𝐚𝐭𝐭𝐞𝐫𝐧 –

𝐔𝐬𝐞 𝐓𝐞𝐬𝐭-𝐚𝐧𝐝-𝐈𝐧𝐜𝐫𝐞𝐦𝐞𝐧𝐭 𝐖𝐡𝐞𝐧:

1. Pure Monitoring Operations

// Waiting for some condition without participating

while (atomic_read(&oom_victims) > 0) { // ← Just observing

schedule_timeout_interruptible(HZ/10);

}

disable_oom_killer();

Why it works: You’re not modifying the thing you’re monitoring

2. Pre-validation Required

// When you need to validate before expensive operations

if (user_has_permission() && resource_available()) {

atomic_inc(&active_users);

start_expensive_operation();

}

Why it works: Validation logic happens before any state changes

3. Error-Prone Rollback Scenarios

// When rollback is complex or impossible

if (can_allocate_resources()) { // ← Check first

atomic_inc(&resource_users);

allocate_complex_resources(); // ← Hard to undo

}

Why it works: Avoids partial state that’s hard to clean up

𝐔𝐬𝐞 𝐈𝐧𝐜𝐫𝐞𝐦𝐞𝐧𝐭-𝐚𝐧𝐝-𝐓𝐞𝐬𝐭 𝐖𝐡𝐞𝐧:

1. Reference Counting Safety

// Protecting against concurrent destruction

if (atomic_inc_not_zero(&obj->ref_count)) {

use_object_safely(obj); // ← Object can’t be freed

atomic_dec(&obj->ref_count); // ← Drop the reference (real code frees the object when the count reaches 0)

} else {

return -ENODEV; // ← Object being destroyed

}

Why it’s critical: Prevents use-after-free in concurrent destruction

2. State Transition Detection

// Detecting meaningful state changes

int users = atomic_inc_return(&active_users);

if (users == 1) { // ← First user: enable features

enable_expensive_hardware();

}

// Later, on close:

users = atomic_dec_return(&active_users);

if (users == 0) { // ← Last user: disable features

disable_expensive_hardware();

}

Why it’s superior: Atomic detection of 0→1 and 1→0 transitions

3. Resource Limit Enforcement

// Atomic limit checking with rollback capability

int active = atomic_inc_return(&active_dumps);

if (active > MAX_DUMPS) {

atomic_dec(&active_dumps); // ← Easy rollback

return -EAGAIN; // ← Reject excess

}

create_core_dump(); // ← Safe to proceed

Why it’s reliable: No race between check and increment
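The limit-enforcement pattern above can be tried in user space with C11 atomics. This is a sketch under assumptions: `begin_dump()` and `end_dump()` are hypothetical helpers, `MAX_DUMPS` is set to 2 for illustration, and `atomic_fetch_add()` plus one stands in for the kernel's `atomic_inc_return()`.

```c
#include <stdatomic.h>

#define MAX_DUMPS 2

static atomic_int active_dumps;    /* zero-initialized at program start */

/* Returns 0 if a dump slot was claimed, -1 if the limit is already reached. */
int begin_dump(void)
{
    /* atomic_fetch_add returns the previous value, so add 1 for the new one. */
    int active = atomic_fetch_add(&active_dumps, 1) + 1;

    if (active > MAX_DUMPS) {
        atomic_fetch_sub(&active_dumps, 1);   /* roll back our own increment */
        return -1;
    }
    return 0;
}

/* Releases a slot claimed by a successful begin_dump(). */
void end_dump(void)
{
    atomic_fetch_sub(&active_dumps, 1);
}
```

Because each caller learns the value produced by its own increment, two callers can never both observe "one slot left" and both proceed.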

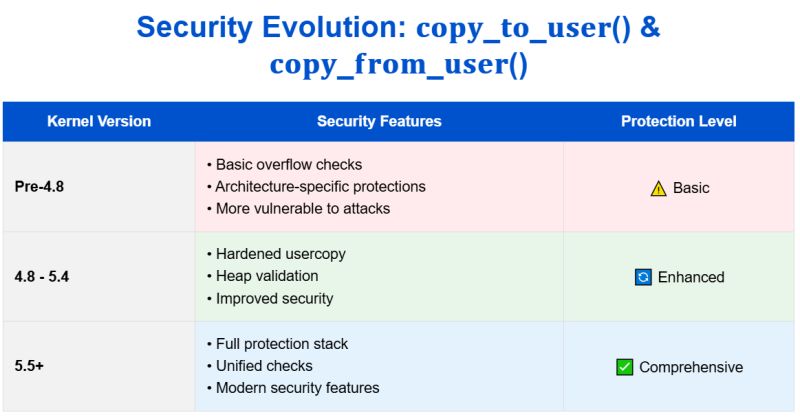

Every kernel developer, from beginner to expert, will encounter these fundamental functions, which handle one of the most critical operations in Linux: secure data transfer between user space and kernel space.

copy_from_user() // Userspace → Kernel

copy_to_user() // Kernel → Userspace

1. 2002: The Early Days – Simple but Dangerous (Linux 2.4)

static __inline__ unsigned long copy_from_user(void *to, const void *from, unsigned long n)

{

if (access_ok(VERIFY_READ, from, n))

__do_copy_from_user(to, from, n);

else

memzero(to, n); // Dangerous with negative n!

return n;

}

Why Changed:

– Initial attempt at memory safety

– Could wipe gigabytes with negative numbers

– No size validation

2. 2005: First Size Check (Linux 2.6)

static inline int copy_from_user(…) {

if (unlikely(n > INT_MAX))

BUG(); // Hard stop for large sizes

return __copy_from_user(to, from, n);

}

Why Changed:

– Added overflow protection

– Prevented large buffer attacks

3. 2009: Compiler-Assisted Protection (Linux 2.6)

static inline unsigned long copy_from_user(void *to, const void __user *from, unsigned long n)

{

int sz = __compiletime_object_size(to);

int ret = -EFAULT;

if (likely(sz == -1 || sz >= n))

ret = _copy_from_user(to, from, n);

else

WARN(1, "Buffer overflow detected!\n");

return ret;

}

Why Changed:

– Added compile-time checks

– Buffer overflow detection

– x86 architecture improvements

4. 2013: copy_to_user Enhancement (Linux 3.13)

static inline unsigned long copy_to_user(void __user *to, const void *from, unsigned long n)

{

int sz = __compiletime_object_size(from);

might_fault();

if (likely(sz < 0 || sz >= n))

n = _copy_to_user(to, from, n);

else if(__builtin_constant_p(n))

copy_to_user_overflow();

else

__copy_to_user_overflow(sz, n);

return n;

}

Why Changed:

– Symmetric protection for data output

– Prevented kernel data leaks

– Better compile-time validations

5. 2016: The Hardening Revolution (Linux 4.8)

static __always_inline bool check_copy_size(const void *addr, size_t bytes, bool is_source)

{

int sz = __compiletime_object_size(addr);

if (unlikely(sz >= 0 && sz < bytes)) {

if (!__builtin_constant_p(bytes))

copy_overflow(sz, bytes);

return false;

}

check_object_size(addr, bytes, is_source);

return true;

}

Why Changed:

– Complete heap validation

– Object bounds checking

– Architecture-independent security

6. 2019: Modern Protection (Linux 5.5)

static __always_inline bool check_copy_size(const void *addr, size_t bytes, bool is_source)

{

// Previous checks plus:

if (WARN_ON_ONCE(bytes > INT_MAX))

return false;

check_object_size(addr, bytes, is_source);

return true;

}
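The idea behind the later versions (reject a copy whose size exceeds the destination or an upper bound before touching memory) can be illustrated in user space. This is a sketch, not the kernel code: `copy_size_ok()` and `bounded_copy()` are hypothetical names, and the destination size is passed explicitly because there is no compile-time object-size lookup here.

```c
#include <limits.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical check: the copy must fit the destination and stay within INT_MAX. */
static bool copy_size_ok(size_t dst_size, size_t bytes)
{
    if (bytes > (size_t)INT_MAX)
        return false;
    return bytes <= dst_size;
}

/* Returns the number of bytes NOT copied (0 on success),
 * mirroring the return convention of copy_from_user(). */
size_t bounded_copy(void *dst, size_t dst_size, const void *src, size_t bytes)
{
    if (!copy_size_ok(dst_size, bytes))
        return bytes;       /* refuse the whole copy; dst is left untouched */
    memcpy(dst, src, bytes);
    return 0;
}
```

Copying 4 bytes into a 4-byte buffer succeeds and returns 0; asking for 9 bytes into the same buffer returns 9 and leaves the buffer unchanged.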

For more Info –

https://lnkd.in/gG6WrCCV

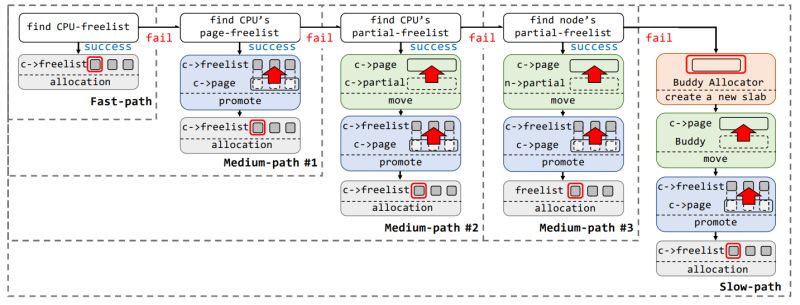

How does the Linux kernel efficiently manage millions of small object allocations? Let’s dive deep into the SLUB allocator (the "unqueued" slab allocator), the 𝐛𝐚𝐜𝐤𝐛𝐨𝐧𝐞 𝐨𝐟 𝐋𝐢𝐧𝐮𝐱 𝐤𝐞𝐫𝐧𝐞𝐥 𝐦𝐞𝐦𝐨𝐫𝐲 𝐦𝐚𝐧𝐚𝐠𝐞𝐦𝐞𝐧𝐭! 🐧

𝐓𝐞𝐜𝐡𝐧𝐢𝐜𝐚𝐥 𝐃𝐞𝐞𝐩-𝐃𝐢𝐯𝐞:

1. 𝐂𝐨𝐫𝐞 𝐀𝐫𝐜𝐡𝐢𝐭𝐞𝐜𝐭𝐮𝐫𝐞 🏗️

The SLUB allocator implements a sophisticated multi-tiered strategy:

𝐀𝐥𝐥𝐨𝐜𝐚𝐭𝐢𝐨𝐧 𝐏𝐚𝐭𝐡𝐬

🚀 Fast-path (Primary):

if (likely(freelist)) {

return freelist; // Direct hit: ~10-20 cycles

}

⚡ Medium-paths:

– CPU page-freelist

– Partial lists

– Node-level operations

– Typical overhead: 100-500 cycles

🔄 Slow-path (Fallback):

– Buddy allocator interaction

– Full page allocation

– Slab initialization

– Cost: 1000+ cycles
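The fast path works because a free object stores the pointer to the next free object inside itself, so allocation is a single pointer pop. A toy user-space pool illustrates this; it is not SLUB, and `struct pool`, `pool_init()`, `pool_alloc()`, and `pool_free()` are hypothetical names with no per-CPU state, locking, or fallback to a page allocator.

```c
#include <stddef.h>

#define OBJECT_SIZE  64
#define OBJECT_COUNT 4

/* While an object is free, its first bytes hold the next-free pointer. */
union object {
    union object *next_free;
    unsigned char payload[OBJECT_SIZE];
};

struct pool {
    union object *freelist;             /* head of the free list */
    union object slab[OBJECT_COUNT];    /* backing storage ("one slab") */
};

/* Threads every object onto the free list, lowest address first. */
void pool_init(struct pool *p)
{
    p->freelist = NULL;
    for (size_t i = OBJECT_COUNT; i-- > 0;) {
        p->slab[i].next_free = p->freelist;
        p->freelist = &p->slab[i];
    }
}

/* Fast path: pop the head of the free list; NULL when the slab is exhausted. */
void *pool_alloc(struct pool *p)
{
    union object *obj = p->freelist;

    if (obj == NULL)
        return NULL;
    p->freelist = obj->next_free;
    return obj;
}

/* Pushes the object back; it becomes the next one handed out. */
void pool_free(struct pool *p, void *ptr)
{
    union object *obj = ptr;

    obj->next_free = p->freelist;
    p->freelist = obj;
}
```

In this toy version the exhausted case simply returns NULL; in the kernel that is the point where the medium and slow paths listed above take over.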

2. 𝐀𝐝𝐯𝐚𝐧𝐜𝐞𝐝 𝐅𝐞𝐚𝐭𝐮𝐫𝐞𝐬 🛠️

▪️ Memory Organization:

struct kmem_cache {

struct kmem_cache_cpu __percpu *cpu_slab;

unsigned long flags;

int size; // Object size including metadata

int object_size; // Object size without metadata

int offset; // Free pointer offset

};

▪️ Security Mechanisms:

🛡️ Protection Features:

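– Freelist pointer obfuscation (CONFIG_SLAB_FREELIST_HARDENED)

– Memory poisoning (0x6b for freed objects, 0x5a for uninitialized objects, 0xa5 as the end marker)

– Red-zoning for overflow detection

– Freelist randomization (CONFIG_SLAB_FREELIST_RANDOM)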

3. 𝐃𝐞𝐛𝐮𝐠𝐠𝐢𝐧𝐠 & 𝐌𝐨𝐧𝐢𝐭𝐨𝐫𝐢𝐧𝐠 🔍

▪️ Runtime Analysis:

# Enable tracing for one cache

echo 1 > /sys/kernel/slab/kmalloc-1024/trace

# Monitor statistics

cat /proc/slabinfo

▪️ 𝐏𝐞𝐫𝐟𝐨𝐫𝐦𝐚𝐧𝐜𝐞 𝐓𝐨𝐨𝐥𝐬:

– kmem_cache_flags

– slabinfo statistics

– Memory leak tracking

– Allocation pattern analysis

4. 𝐑𝐞𝐚𝐥-𝐖𝐨𝐫𝐥𝐝 𝐀𝐩𝐩𝐥𝐢𝐜𝐚𝐭𝐢𝐨𝐧𝐬 💻

▪️ Common Use Cases:

– Network packet buffers (skbuff)

– File system caches (dentry, inode)

– Process descriptors (task_struct)

– Device driver allocations

▪️ Performance Impact:

– Critical path optimization

– Cache-line alignment

– NUMA awareness

– Memory fragmentation prevention

5. 𝐁𝐞𝐬𝐭 𝐏𝐫𝐚𝐜𝐭𝐢𝐜𝐞𝐬 & 𝐓𝐢𝐩𝐬 📌

▪️ Development Guidelines:

– Align object sizes to cache lines

– Use appropriate GFP flags

– Implement proper error handling

– Consider NUMA topology

▪️ Troubleshooting:

– Memory leak detection

– Fragmentation analysis

– Performance profiling

– Debug flag usage

💡𝐓𝐢𝐩𝐬:

1. Use SLAB_HWCACHE_ALIGN for hot paths

2. Implement bulk allocation for better performance

3. Consider using per-CPU caches for high-frequency allocations

4. Monitor partial lists for fragmentation

𝐑𝐞𝐚𝐥-𝐖𝐨𝐫𝐥𝐝 𝐈𝐦𝐩𝐚𝐜𝐭:

– Critical for container orchestration

– Essential for high-performance networking

– Fundamental to filesystem performance

– Key to system stability

Ever wondered how to execute code before main() or perform cleanup after program exit in C? Let’s explore GCC’s powerful constructor and destructor attributes!

𝐊𝐞𝐲 𝐓𝐞𝐜𝐡𝐧𝐢𝐜𝐚𝐥 𝐈𝐧𝐬𝐢𝐠𝐡𝐭𝐬:

1. Section Control:

– Use __attribute__((constructor)) and __attribute__((destructor)) to define functions that run before/after main()

– Create custom sections using __attribute__((section(".init"))) for specialized initialization

2. 𝐄𝐱𝐞𝐜𝐮𝐭𝐢𝐨𝐧 𝐎𝐫𝐝𝐞𝐫:

📊 Priority Sequence:

– .init section (highest priority)

– Constructor functions (by priority)

– main()

– Destructor functions (reverse priority)

– .fini section

3. 𝐏𝐫𝐢𝐨𝐫𝐢𝐭𝐲 𝐌𝐚𝐧𝐚𝐠𝐞𝐦𝐞𝐧𝐭:

– Range: 0-100 reserved for system use

– Custom priorities should use values >100

– Example: __attribute__((constructor(101))) for user-defined ordering

4. 𝐏𝐫𝐚𝐜𝐭𝐢𝐜𝐚𝐥 𝐀𝐩𝐩𝐥𝐢𝐜𝐚𝐭𝐢𝐨𝐧𝐬:

– Library initialization/cleanup

– Resource management

– Plugin architectures

– Dynamic module loading

– Global state setup/teardown

💡 Pro Tip: You can combine these with static initialization to create powerful startup sequences and run cleanup on normal exit, similar to C++ constructors but with more fine-grained control. Destructors do not run if the process calls _exit() or is killed by a signal.

𝐂𝐡𝐞𝐜𝐤 𝐨𝐮𝐭 𝐨𝐮𝐫 𝐯𝐢𝐝𝐞𝐨 𝐬𝐞𝐬𝐬𝐢𝐨𝐧 𝐡𝐞𝐫𝐞:

https://lnkd.in/gUrX2bSw

𝐄𝐱𝐚𝐦𝐩𝐥𝐞 𝐂𝐨𝐝𝐞 𝐒𝐧𝐢𝐩𝐩𝐞𝐭:

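```c
__attribute__((constructor(101)))
void early_init(void) {
    // Called before main(); among constructors, lower numbers run first
}

__attribute__((section(".init")))
void very_early_init(void) {
    // Intended to run even before constructors; placing a whole function
    // in .init is toolchain-specific and can break the startup code
}

__attribute__((destructor(102)))
void cleanup(void) {
    // Runs on normal exit (return from main() or exit()),
    // not on _exit() or abort()
}
```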
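The ordering rule for constructor priorities can be checked with a small experiment. This sketch relies on the GCC/Clang `constructor` attribute; `init_order`, `init_count`, `first_init()`, and `second_init()` are hypothetical names used only to record what ran and when.

```c
/* Records the priority of each constructor in the order they actually ran. */
static int init_order[2];
static int init_count;

__attribute__((constructor(101)))
static void first_init(void)
{
    init_order[init_count++] = 101;
}

__attribute__((constructor(102)))
static void second_init(void)
{
    init_order[init_count++] = 102;
}
```

By the time `main()` starts, both functions have already run, and the lower priority number ran first. Destructors with priorities run in the opposite order.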


Looking for the best full stack course to kickstart your tech career? You’re in the right place. Full stack developers are among the most sought-after professionals in today’s tech industry, with companies actively seeking versatile developers who can handle both front-end and back-end development.

In this comprehensive guide, we’ll explore the best full stack online courses available in 2024, with a special focus on programs offering job placement support. Whether you’re a beginner or an experienced developer looking to upskill, this guide will help you choose the perfect course for your career goals.

Before diving into the course options, here’s what to look for:

Expertifie offers one of the most comprehensive full stack development courses with both self-paced and live learning options. Their program stands out for its practical approach and strong focus on job placement.

Key Features:

Course Duration:

Course Fees: 75,000 INR

Best For: Both beginners and working professionals looking to transition into full stack development

Placement Support:

Scaler Academy offers a structured approach to learning full stack development with different tracks for varying experience levels.

Key Features:

Course Duration: 9.5-11.5 months

Best For: Learners at all experience levels

Placement Support:

Udacity’s program focuses on practical skills with real-world projects and expert feedback.

Key Features:

Course Duration: 4 months

Fees: $1,356 or $339/month

Placement Support:

Springboard offers a comprehensive bootcamp with a job guarantee.

Key Features:

Course Duration: 9 months

Fees: $10,900 (upfront) or $1,211/month

Placement Support:

IBM’s program offers a comprehensive curriculum with a focus on cloud-native development.

Key Features:

Course Duration: 4 months

Fees: $39/month

Placement Support:

Simplilearn’s program, in collaboration with Caltech CTME, offers comprehensive full stack training.

Key Features:

Course Duration: 9 months

Fees: ₹99,999

Placement Support:

UpGrad’s intensive bootcamp focuses on practical skills and career readiness.

Key Features:

Course Duration: 13 weeks

Fees: ₹99,000 + GST

Placement assistance:

Consider these factors when selecting your course:

Full stack developers can expect:

Investing in a full stack development course is a smart career move in 2024. With options ranging from self-paced learning to intensive bootcamps, there’s a program for every learning style and career goal. Expertifie’s comprehensive program stands out for its flexible learning options and strong placement support, making it an excellent choice for aspiring full stack developers.

Remember to carefully evaluate each course based on your specific needs, schedule, and career objectives. The right course will not only teach you the technical skills but also prepare you for a successful career in full stack development.

Would you like to learn more about any specific course or have questions about starting your full stack development journey? Feel free to reach out in the comments below!

We offer live online classes (₹85,000) on weekends and recorded video lectures (₹35,000 with lifetime access) - no offline classes currently.

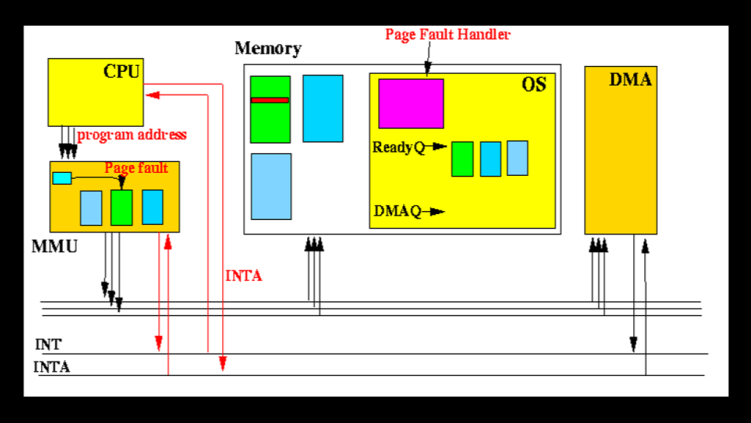

Ever wondered how Linux handles memory access in critical sections where even a millisecond of delay could spell disaster? Enter `pagefault_disabled`, a fascinating kernel feature that’s like a traffic controller for memory faults.

“𝐒𝐨𝐦𝐞𝐭𝐢𝐦𝐞𝐬 𝐭𝐡𝐞 𝐫𝐢𝐠𝐡𝐭 𝐭𝐡𝐢𝐧𝐠 𝐭𝐨 𝐝𝐨 𝐢𝐬 𝐭𝐨 𝐣𝐮𝐬𝐭 𝐟𝐚𝐢𝐥. 𝐇𝐚𝐯𝐢𝐧𝐠 𝐚 𝐩𝐚𝐠𝐞 𝐟𝐚𝐮𝐥𝐭 𝐡𝐚𝐧𝐝𝐥𝐞𝐫 𝐭𝐡𝐚𝐭 𝐭𝐫𝐢𝐞𝐬 𝐭𝐨 𝐛𝐞 𝐭𝐨𝐨 𝐜𝐥𝐞𝐯𝐞𝐫 𝐢𝐬 𝐰𝐨𝐫𝐬𝐞 𝐭𝐡𝐚𝐧 𝐨𝐧𝐞 𝐭𝐡𝐚𝐭 𝐣𝐮𝐬𝐭 𝐬𝐚𝐲𝐬 ‘𝐧𝐨’ 𝐪𝐮𝐢𝐜𝐤𝐥𝐲.”

Imagine you’re in the middle of handling a hardware interrupt, and suddenly you need to access some memory. What happens if that memory isn’t readily available? Normally, the kernel would happily pause, load the memory from disk, and continue. But in an interrupt handler? That would be catastrophic!

This is where `𝐜𝐮𝐫𝐫𝐞𝐧𝐭->𝐩𝐚𝐠𝐞𝐟𝐚𝐮𝐥𝐭_𝐝𝐢𝐬𝐚𝐛𝐥𝐞𝐝` comes in. It’s like putting up a “Do Not Disturb” sign for memory management. When enabled, it tells the kernel: “Don’t try to be helpful – if something goes wrong, fail fast!”

pagefault_disable(); // “Do Not Disturb” sign goes up

// Critical operation that can’t afford to sleep

pagefault_enable(); // Back to normal

“𝐓𝐡𝐞 𝐩𝐚𝐠𝐞𝐟𝐚𝐮𝐥𝐭_𝐝𝐢𝐬𝐚𝐛𝐥𝐞𝐝() 𝐦𝐞𝐜𝐡𝐚𝐧𝐢𝐬𝐦 𝐢𝐬 𝐨𝐧𝐞 𝐨𝐟 𝐭𝐡𝐨𝐬𝐞 𝐬𝐮𝐛𝐭𝐥𝐞 𝐲𝐞𝐭 𝐜𝐫𝐢𝐭𝐢𝐜𝐚𝐥 𝐟𝐞𝐚𝐭𝐮𝐫𝐞𝐬 𝐭𝐡𝐚𝐭 𝐦𝐚𝐤𝐞𝐬 𝐭𝐡𝐞 𝐝𝐢𝐟𝐟𝐞𝐫𝐞𝐧𝐜𝐞 𝐛𝐞𝐭𝐰𝐞𝐞𝐧 𝐚 𝐤𝐞𝐫𝐧𝐞𝐥 𝐭𝐡𝐚𝐭 𝐰𝐨𝐫𝐤𝐬 𝐚𝐧𝐝 𝐨𝐧𝐞 𝐭𝐡𝐚𝐭 𝐰𝐨𝐫𝐤𝐬 𝐫𝐞𝐥𝐢𝐚𝐛𝐥𝐲.”

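It’s a simple counter in each task’s structure, but its impact is profound: while it is non-zero, a fault on user memory is not serviced and the access fails immediately instead of sleeping. With this mechanism, Linux can safely: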

– Handle hardware interrupts

– Access device registers

– Perform atomic operations

– Manage critical sections

Want to dive deep into how this mechanism works? Check out the detailed technical explanation, which covers everything from x86 architecture specifics to real-world usage patterns.

𝐑𝐞𝐦𝐞𝐦𝐛𝐞𝐫: 𝐈𝐧 𝐭𝐡𝐞 𝐤𝐞𝐫𝐧𝐞𝐥, 𝐬𝐨𝐦𝐞𝐭𝐢𝐦𝐞𝐬 𝐟𝐚𝐢𝐥𝐢𝐧𝐠 𝐟𝐚𝐬𝐭 𝐢𝐬 𝐛𝐞𝐭𝐭𝐞𝐫 𝐭𝐡𝐚𝐧 𝐭𝐫𝐲𝐢𝐧𝐠 𝐭𝐨𝐨 𝐡𝐚𝐫𝐝!